This blog post explains Kubernetes red/black deployments with Spinnaker and traffic shaping with Istio.

Kubernetes deployment strategies

Kubernetes supports declarative rollout strategies through management objects such as Deployments and ReplicaSets. Some commonly used rollout strategies are:

Recreate

Terminates all pods running the current version and then replaces them with pods running the new version. The application is unavailable between the two steps.

spec:
  replicas: 10
  strategy:
    type: Recreate

Rolling Update

- Creates instances of the new version and, as they become ready, terminates instances of the older version. The rollout can be tuned with maxSurge (how many pods may run above the desired replica count) and maxUnavailable (how many pods may be unavailable below it); both accept an absolute number or a percentage of the desired replicas.

- For example, with 10 replicas, maxSurge set to 1 (10%) and maxUnavailable set to 0, the rolling update starts by creating 1 new instance; after that instance is ready, one instance of the older version is removed, and the cycle repeats. So, for brief periods, 11 pods are running.

- If maxSurge is set to 50% (with maxUnavailable still 0), 5 new instances are created immediately. As the new instances become ready, old instances are terminated and further new instances are created, so the total never exceeds 15 pods and never drops below 10 available pods. This continues until all 10 instances run the new version and no old instances remain.

- If maxUnavailable is set to 50% (with maxSurge set to 0), at least 5 instances must remain available at any time. So, 5 old instances are terminated as soon as the rollout starts and 5 new instances are created. As new instances become ready, the remaining old instances are deleted and more new instances are started, until all 10 instances run the new version.

spec:
  replicas: 10
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxSurge: 10%
      maxUnavailable: 10%
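The rollout arithmetic in the examples above can be checked with a small simulation. This is a simplified model, not the Deployment controller's actual code: it assumes new pods become Ready the moment they are created and alternates one scale-up and one scale-down action per step. The rounding rules (maxSurge rounds up, maxUnavailable rounds down) follow the Kubernetes documentation; the function names `resolve` and `rolling_update` are hypothetical.

```python
import math


def resolve(value, replicas, round_up):
    """Convert an absolute count or an "NN%" string into a pod count."""
    if isinstance(value, str) and value.endswith("%"):
        raw = int(value[:-1]) * replicas / 100
        return math.ceil(raw) if round_up else math.floor(raw)
    return int(value)


def rolling_update(replicas, max_surge, max_unavailable):
    """Return the (old, new) pod counts after each scaling action (simplified model)."""
    surge = resolve(max_surge, replicas, round_up=True)  # maxSurge rounds up
    unavailable = resolve(max_unavailable, replicas, round_up=False)  # rounds down
    if surge == 0 and unavailable == 0:
        raise ValueError("maxSurge and maxUnavailable cannot both be 0")
    min_available = replicas - unavailable
    old, new = replicas, 0
    history = [(old, new)]
    while old > 0 or new < replicas:
        # Scale up: create new pods while the total stays within replicas + surge.
        new = min(replicas, new + max(0, replicas + surge - (old + new)))
        if history[-1] != (old, new):
            history.append((old, new))
        # Scale down: new pods are assumed Ready at once, so old pods can be
        # removed while old + new stays at or above min_available.
        old = max(0, min(old, min_available - new))
        if history[-1] != (old, new):
            history.append((old, new))
    return history
```

For example, `rolling_update(10, "50%", 0)` returns `[(10, 0), (10, 5), (5, 5), (5, 10), (0, 10)]`: old pods are removed in batches as new ones become ready, while the total never exceeds 15 pods.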

Red/Black (Blue/Green)

- Kubernetes does not natively support a red/black rollout strategy. To implement red/black, one creates a new Deployment with a different name and switches the Service traffic outside of the Deployment controller, typically by changing the Service selector. The deployment agent is also responsible for removing older Deployments.

- Label definition for the pods in the Deployment or ReplicaSet (label values must be strings, so the version is quoted):

spec:
  template:
    metadata:
      labels:
        application: restapp
        version: "1.0"

- Service selector to route traffic to version 1.0 of restapp:

kind: Service
spec:
  selector:
    application: restapp
    version: "1.0"

- Service selector to route traffic to version 2.0 of restapp:

kind: Service
spec:
  selector:
    application: restapp
    version: "2.0"

- If the new version fails, rolling back is as simple as setting the Service selector back to version 1.0:

kind: Service
spec:
  selector:
    application: restapp
    version: "1.0"

- After switching traffic back, the user can remove the failed version 2.0 deployment. If the new version is healthy instead, it is the version 1.0 deployment that gets removed.
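The red/black switch works because a Service routes to every pod whose labels contain all of its selector's key/value pairs. The sketch below models that equality-based matching in plain Python; the pod names are hypothetical and this is an illustration of the selector semantics, not Kubernetes code.

```python
def matches(selector, labels):
    """Equality-based selector: every key/value in the selector must appear in the pod's labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def endpoints(selector, pods):
    """Names of the pods a Service with this selector would route traffic to."""
    return sorted(name for name, labels in pods.items() if matches(selector, labels))


# Hypothetical pods from two side-by-side Deployments (names are illustrative).
PODS = {
    "restapp-v1-a": {"application": "restapp", "version": "1.0"},
    "restapp-v1-b": {"application": "restapp", "version": "1.0"},
    "restapp-v2-a": {"application": "restapp", "version": "2.0"},
}

blue = {"application": "restapp", "version": "1.0"}   # selector before the switch
green = {"application": "restapp", "version": "2.0"}  # selector after the switch
```

Changing the selector from `blue` to `green` moves all traffic to `restapp-v2-a`; setting it back to `blue` is the rollback. Dropping the `version` key entirely selects the pods of both versions.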

Canary Deployment

- A canary deployment is a rollout strategy that deploys a new version of a service to handle only a small share of the traffic. After the functionality and performance of the new version have been verified on that traffic, the rollout can proceed using one of the strategies outlined above.

kind: Deployment
metadata:
  name: restapp-canary
spec:
  replicas: 1
  template:
    metadata:
      labels:
        application: restapp
        version: "2.0"
---
kind: Service
spec:
  selector:
    application: restapp

- Notice that the Service selector does not include a version, as it did in the red/black strategy. Hence, traffic is routed to pods of both version 1.0 and version 2.0, and because there is only one instance of 2.0, it receives only a proportional share of the requests. Once the canary is verified, the rollout of the main restapp deployment can proceed with RollingUpdate or red/black.

- A service mesh such as Istio can control the traffic split independently of the number of instances, as shown later in this document.
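Without a mesh, the canary's share of traffic is tied to pod counts. The sketch below computes the expected split, assuming requests are spread roughly evenly across every pod the Service selects (actual kube-proxy distribution is randomized, so observed shares vary around these values). The helper names are hypothetical.

```python
import math
from fractions import Fraction


def service_split(pod_counts):
    """Expected traffic share per version when requests are spread evenly
    across every pod the Service selects (no service mesh)."""
    total = sum(pod_counts.values())
    return {version: Fraction(count, total) for version, count in pod_counts.items()}


def min_pods_for_share(share):
    """Smallest total pod count at which a single canary pod gets at most `share`."""
    return math.ceil(1 / Fraction(share))
```

With 9 pods of version 1.0 and 1 canary pod, the canary receives about 1/10 of the requests. Getting it down to 1 percent would require 100 pods in total, which is the limitation a weighted mesh route removes.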

Spinnaker support for deployment strategies

- Spinnaker's Kubernetes V1 provider abstracted the Kubernetes API and generated manifests with ReplicaSet-based deployments from values specified by the user. The Kubernetes V2 provider, on the other hand, works natively with Kubernetes manifests and applies them using kubectl. Spinnaker supports multiple ways of hydrating manifests for dynamic configuration of deployments.

- Helm templates with user-specified values, the Spring Expression Language (SpEL) for hydrating templates, and artifact substitution with additional checks on triggered or queried data are all supported: https://spinnaker.io/docs/reference/providers/kubernetes-v2/

Spinnaker support for red/black with Kubernetes V2

- Spinnaker makes red/black deployments easy by managing the life cycle of the rollouts and automating traffic management through annotations.

- This functionality requires ReplicaSet-based deployments to keep life cycle management simple. A Deployment creates a ReplicaSet with a new name for every rollout, so version history and traffic would have to be managed at both the Deployment and the ReplicaSet level, which is redundant.

- A red/black implementation requires two objects: a Service that routes traffic to the pods using a selector that does not match the ReplicaSet's selector, and a ReplicaSet whose pod template manages the life cycle of the pods.

apiVersion: v1
kind: Service
metadata:
  name: frontend
spec:
  ports:
  - name: http
    port: 9080
  selector:
    type: frontend

- Consider the following rollout with a ReplicaSet:

apiVersion: apps/v1
kind: ReplicaSet
metadata:
  annotations:
    strategy.spinnaker.io/max-version-history: '2'
    traffic.spinnaker.io/load-balancers: '["service frontend"]'
  name: restapp
spec:
  selector:
    matchLabels:
      application: restapp
  template:
    metadata:
      labels:
        application: restapp

- Two annotations are added to the ReplicaSet definition. The traffic.spinnaker.io/load-balancers annotation names the Service that fronts the ReplicaSet (frontend in this case); Spinnaker adds that Service's selector labels to the ReplicaSet's pods so that they receive its traffic.

- The second annotation, strategy.spinnaker.io/max-version-history, specifies how many versions of the ReplicaSet to keep. In this case, at most two versions are present and anything older is deleted.

- Notice that the Service's selector (type: frontend) does not match the ReplicaSet's own selector (application: restapp). This is important because when Spinnaker disables a ReplicaSet, it takes the ReplicaSet out of the Service's traffic path by removing the Service's selector labels from the ReplicaSet's pods.

- If the Service and the ReplicaSet used the same selector labels, removing those labels would also take the pods out of the ReplicaSet's selector, so the ReplicaSet would stop managing them and the pods would be orphaned.

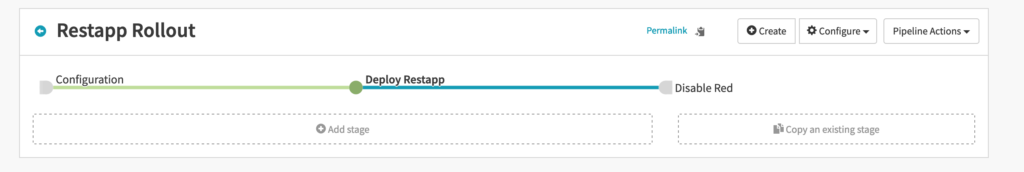

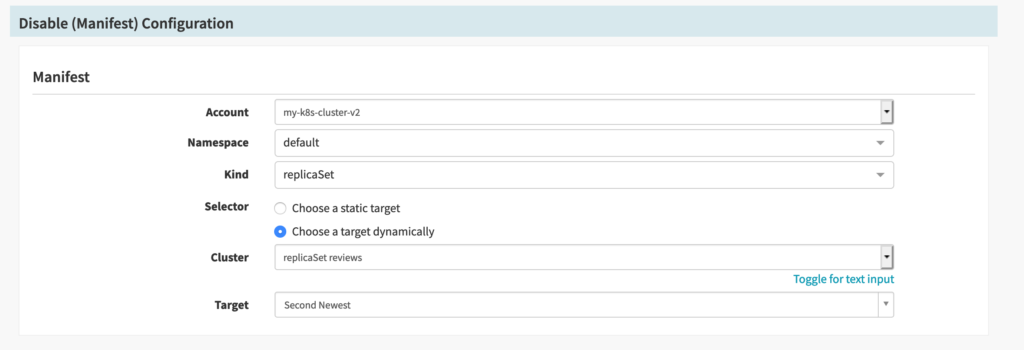
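The label bookkeeping described above can be illustrated with a toy model. It assumes Spinnaker enables a ReplicaSet by adding the Service's selector labels to its pods and disables it by removing them; it is not Spinnaker's code, and the helpers `enable`, `disable`, and `check` are hypothetical.

```python
def matches(selector, labels):
    """Equality-based selector match: all selector pairs must be present."""
    return all(labels.get(k) == v for k, v in selector.items())


def enable(pod_labels, service_selector):
    """Modelled enable: add the Service's selector labels to a pod."""
    return {**pod_labels, **service_selector}


def disable(pod_labels, service_selector):
    """Modelled disable: drop the Service's selector keys from a pod."""
    return {k: v for k, v in pod_labels.items() if k not in service_selector}


SERVICE_SELECTOR = {"type": "frontend"}


def check(replicaset_selector):
    """Return (served_when_enabled, served_when_disabled, owned_when_disabled)."""
    pod = enable({"application": "restapp"}, SERVICE_SELECTOR)
    off = disable(pod, SERVICE_SELECTOR)
    return (
        matches(SERVICE_SELECTOR, pod),
        matches(SERVICE_SELECTOR, off),
        matches(replicaset_selector, off),
    )
```

With the non-overlapping selector `{"application": "restapp"}`, a disabled pod leaves the Service but stays owned by the ReplicaSet. If the ReplicaSet selector also included `type: frontend`, the disabled pod would no longer match it, which is the orphaning case.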

Configuring with Spinnaker

- Rollout a new version of restapp service using red-black strategy

- Settings for diasbling the previous version after rolling out the new version

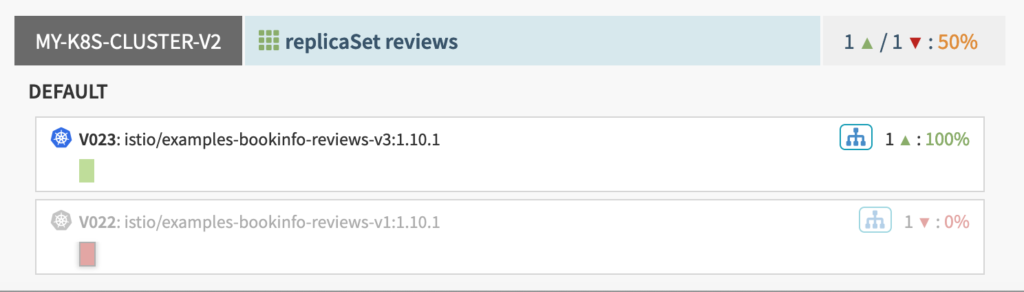

- CLuster view in Spinnaker after rollout of service with red-black strategy

Istio based traffic management

- Istio is an open source service mesh that provides traffic management along with service discovery, monitoring, and more for distributed applications. Istio manages traffic with routing rules that can split a percentage of the incoming traffic between versions of a service or match requests on headers or paths.

- Istio is configured through manifests of the custom resources (CRDs) installed with Istio, and Spinnaker can deploy CRDs as part of a pipeline execution. Using Istio's VirtualService and DestinationRule manifests, one can implement A/B testing, canary analysis, and blue/green deployment analysis. We will look at a simple canary implementation for Kubernetes deployments using Spinnaker and Istio.

Managing destination rules

- Destination rules are set up in Istio to define named subsets of a service based on pod metadata such as labels. In the Istio bookinfo example, the destination rule for reviews defines two subsets, v1 and v2, which select the pods labeled version: v1 and version: v2 respectively. The load-balancing policy is set to RANDOM, which picks a random endpoint within the chosen subset.

apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: reviews
spec:
  host: reviews
  trafficPolicy:
    loadBalancer:
      simple: RANDOM
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2
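To make the role of subsets concrete, the sketch below resolves each subset to the pods whose labels match it and then applies RANDOM load balancing within the chosen subset. The pod names are hypothetical and the model only mirrors the semantics described above; it is not how Istio or Envoy are implemented.

```python
import random

# Hypothetical pods behind the reviews Service (names are illustrative).
PODS = {
    "reviews-v1-a": {"app": "reviews", "version": "v1"},
    "reviews-v1-b": {"app": "reviews", "version": "v1"},
    "reviews-v2-a": {"app": "reviews", "version": "v2"},
}

# Subsets as declared in the DestinationRule: name -> label selector.
SUBSETS = {"v1": {"version": "v1"}, "v2": {"version": "v2"}}


def subset_endpoints(subset):
    """Pods whose labels contain every label of the named subset."""
    wanted = SUBSETS[subset]
    return sorted(
        name
        for name, labels in PODS.items()
        if all(labels.get(k) == v for k, v in wanted.items())
    )


def pick_random(subset, rng):
    """RANDOM load balancing: any endpoint of the subset, chosen uniformly."""
    return rng.choice(subset_endpoints(subset))
```

Choosing which subset a request goes to is the VirtualService's job; the DestinationRule only defines the subsets and how endpoints inside each one are picked.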

Setting up Virtual Service with routing rules

- A VirtualService in Istio can modify how traffic is distributed among the subsets defined in destination rules. The following snippet routes 90 percent of the incoming traffic to the v1 subset and the rest to the v2 subset.

apiVersion: networking.istio.io/v1alpha3
kind: VirtualService
metadata:
  name: reviews
spec:
  hosts:
  - reviews
  http:
  - route:
    - destination:
        host: reviews
        subset: v1
      weight: 90
    - destination:
        host: reviews
        subset: v2
      weight: 10
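The effect of the 90/10 weights can be approximated by picking a subset for each request with probability proportional to its weight. This is a statistical sketch of weighted routing under that assumption, not a reproduction of how Envoy implements weighted clusters; `route` and `simulate` are hypothetical names.

```python
import random
from collections import Counter

# (subset, weight) pairs mirroring the VirtualService route above.
ROUTES = [("v1", 90), ("v2", 10)]


def route(rng, routes=ROUTES):
    """Pick a destination subset with probability proportional to its weight."""
    subsets = [subset for subset, _ in routes]
    weights = [weight for _, weight in routes]
    return rng.choices(subsets, weights=weights, k=1)[0]


def simulate(requests, routes=ROUTES, seed=0):
    """Count how many simulated requests land on each subset."""
    rng = random.Random(seed)
    return Counter(route(rng, routes) for _ in range(requests))
```

Over 100,000 simulated requests roughly 10 percent land on v2, regardless of how many pods each subset has. Setting the weights to 100/0 sends everything to v1.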

Canary deployments with Istio

- Canary analysis sends a small percentage of production traffic to the new version being deployed, to validate its functionality and performance in the production environment before rolling it out to the entire deployment. As the VirtualService example shows, a new version can be deployed so that it handles only a small amount of traffic. When validation is complete, one can increase the traffic handled by the new version to 100 percent and then remove the previous version's deployment.

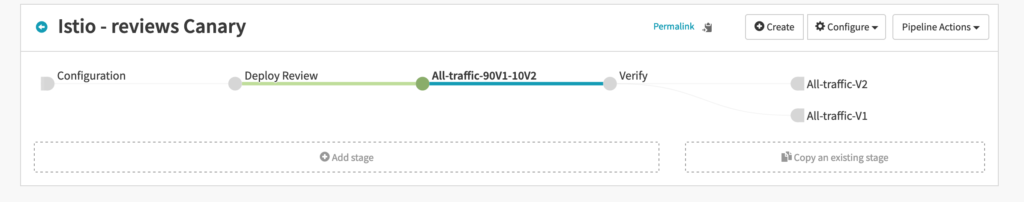

- In Spinnaker the pipeline would look as follows:

- The Deploy Review stage deploys the new version of the reviews deployment and configures 10 percent of the traffic to go to the new version. The Verify stage validates the canary deployment, either manually or with automated verification techniques. If the canary deployment is successful, all traffic is directed to the new version; if not, traffic is redirected back to the existing version.
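The decision logic of this pipeline can be summarized as the sequence of (v1, v2) weights applied to the VirtualService. The sketch below assumes a `verify` callable standing in for the Verify stage; the function and parameter names are hypothetical and this is not a Spinnaker API.

```python
def canary_pipeline(verify, canary_percent=10):
    """Return the (v1_weight, v2_weight) pairs applied to the VirtualService, in order."""
    if not 0 < canary_percent <= 100:
        raise ValueError("canary_percent must be in (0, 100]")
    # Deploy Review stage: send canary_percent of the traffic to the new version.
    applied = [(100 - canary_percent, canary_percent)]
    # Verify stage (manual or automated): promote on success, roll back otherwise.
    target = (0, 100) if verify() else (100, 0)
    if applied[-1] != target:  # skip a no-op update
        applied.append(target)
    return applied
```

Calling it with `canary_percent=100` gives the red/black flow described in the next section: nothing changes on success, and traffic returns to the old version on failure.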

Red-black deployment with Istio

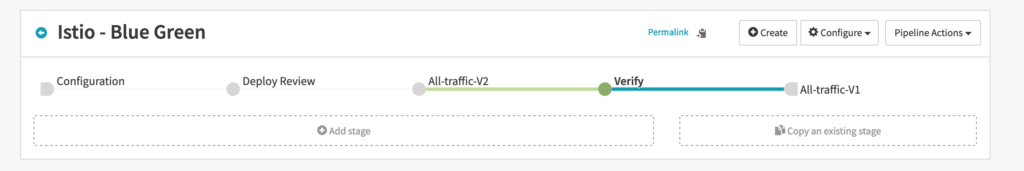

- Configuring red/black (blue/green) with Istio is very similar to configuring a canary.

- The Deploy Review stage deploys the new version of the reviews deployment and configures 100 percent of the traffic to go to the new version. The Verify stage validates the new deployment, either manually or with automated verification techniques. If the new deployment is successful, there is nothing more to do; if not, traffic is redirected back to the existing version.

Spinnaker roadmap for Istio support

- The Spinnaker community is working on making Istio traffic management part of Spinnaker, with built-in support for rolling blue/green deployments and simplified automated analysis. Watch for new features in this area soon.

About OpsMx

Founded with the vision of “delivering software without human intervention,” OpsMx enables customers to transform and automate their software delivery processes. OpsMx builds on open-source Spinnaker and Argo with services and software that help DevOps teams SHIP BETTER SOFTWARE FASTER.
