Introduction

What is blue-green deployment in DevOps?

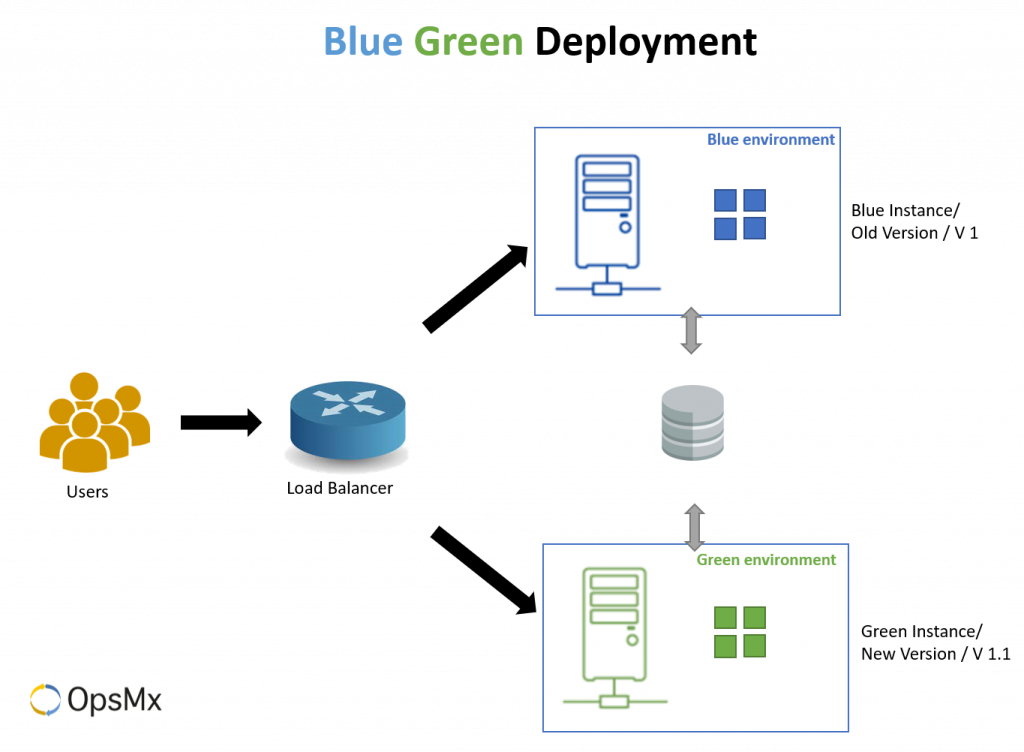

In software delivery, a blue-green deployment is a technique for releasing new software versions by maintaining two separate but identical environments, called blue and green. The existing production environment is the blue environment, and the new version of the software is deployed to the green environment. After thorough testing and validation, production traffic is routed to the green environment, which makes it the new production (blue) environment. The former blue environment can be taken down once the new one is stable.

Why is blue-green deployment useful?

The primary benefit of a blue-green strategy is minimal or zero downtime, with no impact on the end-user experience, both when switching users to a new software release and when rolling back (in case there are unforeseen issues with the new release or deployment).

The concepts and components required to implement blue-green deployments include, but are not limited to, load balancers, routing rules, and container orchestration platforms like Kubernetes.

How does Blue-green deployment work?

The two environments (blue and green)

As shown in the image, let us assume that version 1 is the current version of the application and we want to move to the new update, version 1.1. The environment running version 1 is called the blue environment, and the one running version 1.1 is called the green environment.

The process of switching traffic between the two environments

Now that we have two instances named blue and green, we want users to reach the new green (v1.1) instance rather than the older blue instance. To make this happen, we normally use a load balancer rather than changing DNS records, because DNS propagation is not instantaneous.

This is where load balancers and routers help in switching users from the blue instance to the green one. There is no need to change DNS records, because the DNS record still points to the load balancer, which routes new traffic to the green environment. This gives us full control over where users are sent. That control is needed so that users can be switched back to version 1 (the blue instance) quickly if the green instance fails.

Once the green instance (v1.1) is ready, we move it into production and run it in parallel with the older version. With the help of the load balancer, traffic is switched from blue to green. Most users won’t even notice that they are now accessing a newer version of the service or application.
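The switch described above can be sketched in a few lines of Python. This is only an illustration, not the API of any real load balancer: the `Environment` and `LoadBalancer` classes and their methods are hypothetical names made up for this example.

```python
from dataclasses import dataclass


@dataclass
class Environment:
    """One of the two identical environments (hypothetical model)."""
    name: str     # "blue" or "green"
    version: str  # application version deployed there


@dataclass
class LoadBalancer:
    """Toy stand-in for a load balancer with a single active target."""
    blue: Environment
    green: Environment
    active: str = "blue"  # name of the environment receiving traffic

    def route(self) -> Environment:
        """Return the environment that serves the next request."""
        return self.blue if self.active == "blue" else self.green

    def switch_to(self, name: str) -> None:
        """Point all new traffic at the named environment."""
        if name not in ("blue", "green"):
            raise ValueError(f"unknown environment: {name}")
        self.active = name


lb = LoadBalancer(Environment("blue", "1"), Environment("green", "1.1"))
before = lb.route().version       # traffic is still on version 1
lb.switch_to("green")             # cut over to the new release
after = lb.route().version
lb.switch_to("blue")              # a rollback is the same operation in reverse
rolled_back = lb.route().version
```

The point of the sketch is that cut-over and rollback are the same cheap operation, a change of the active target, while both environments keep running.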

Monitoring

Once the traffic is switched from the blue to the green instance, the DevOps engineers have a short window in which to run smoke tests on the green instance. This is crucial because they need to find any issue with the new version before users are impacted on a wide scale. The smoke tests verify that the key functions of the new version are running as they should.
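A smoke-test gate of this kind might look like the following minimal sketch. The check names and the `smoke_test` and `decide_target` helpers are assumptions made for illustration; in practice each check would call the green environment rather than return a constant.

```python
from typing import Callable, Dict, List


def smoke_test(checks: Dict[str, Callable[[], bool]]) -> List[str]:
    """Run every check and return the names of the ones that failed."""
    failed = []
    for name, check in checks.items():
        try:
            ok = check()
        except Exception:
            ok = False  # a crashing check counts as a failure
        if not ok:
            failed.append(name)
    return failed


def decide_target(checks: Dict[str, Callable[[], bool]]) -> str:
    """Keep traffic on green only if all smoke tests pass; otherwise roll back."""
    return "blue" if smoke_test(checks) else "green"


# Hypothetical checks standing in for real requests to the green environment.
healthy = {"homepage": lambda: True, "login": lambda: True}
broken = {"homepage": lambda: True, "checkout": lambda: 1 / 0 == 0}

healthy_target = decide_target(healthy)
broken_target = decide_target(broken)
broken_failures = smoke_test(broken)
```

Treating an exception as a failed check keeps the gate conservative: any doubt about green sends traffic back to blue.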

Benefits of implementing Blue-green deployment

- Seamless customer experience: users don’t experience any downtime.

- Instant rollbacks: undo the change without adverse effects and go back to the previous best state.

- No upgrade-time schedules for developers: no need to wait for maintenance windows.

- Testing parity: the newer versions can be accurately tested for real-world scenarios.

The blue-green strategy is also well suited to simulating and rehearsing disaster recovery. This is because the blue and green instances are inherently equivalent and there is a quick recovery mechanism if the new release has an issue.

In a canary deployment, by contrast, the testing environment may not be identical to the final production environment. In canary, we use a small portion of the production environment and move a small amount of traffic to the new system. To simulate an actual production scenario, a similar baseline instance is created and then compared with the canary release. Read more about Canary Analysis here.

Gone are the days when DevOps engineers had to wait for low-traffic windows to deploy updates. The blue-green strategy eliminates the need to maintain downtime schedules, and developers can move their updates into production as soon as their code is ready.

Best practices of implementing Blue-green deployment

Choose load balancing over DNS switching

Do not use multiple domains to switch between servers. This is an old way of diverting traffic. DNS changes can take hours or even days to propagate, depending on record TTLs and resolver caching. Browsers can take a long time to pick up the new IP address, so some of your users may still be served by the old environment.

Instead, use load balancing. Load balancers let you point traffic at your new servers immediately, without depending on DNS. This way, you can ensure that all traffic is served by the new production environment.

Keeping databases in sync

One of the biggest challenges of blue-green deployments is keeping databases in sync. Depending on your design, you may be able to feed transactions to both instances to keep the blue instance as a backup when the green is live. Or you may be able to put the application in read-only mode before cut-over, run it for a while in read-only mode, and then switch it to read-write mode. That may be enough to flush out many outstanding issues.
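The first option, feeding transactions to both instances, can be sketched with two in-memory SQLite databases. The `orders` table, the `make_db` helper, and the `record_order` function are hypothetical and exist only for this illustration.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with the same schema for each environment."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, item TEXT)")
    return conn


blue_db, green_db = make_db(), make_db()


def record_order(order_id: int, item: str) -> None:
    """Write the same transaction to both databases (a dual write)."""
    for conn in (green_db, blue_db):
        conn.execute(
            "INSERT INTO orders (id, item) VALUES (?, ?)", (order_id, item)
        )
        conn.commit()


record_order(1, "book")
record_order(2, "pen")

query = "SELECT id, item FROM orders ORDER BY id"
blue_rows = blue_db.execute(query).fetchall()
green_rows = green_db.execute(query).fetchall()
```

Note that this sketch does not handle the case where one write succeeds and the other fails. A real dual-write setup needs a way to detect and repair that divergence.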

Backward compatibility is of utmost importance when the application is business-critical. Any new users or data created on the new version must remain accessible in the event of a rollback. Otherwise, the business risks losing new customers.

Execute a rolling update

Container architectures make rolling, or seamless, blue-green updates practical. With containers, DevOps engineers can perform a blue-green update only on the pods that need it. This decentralized architecture ensures that other parts of the application are not affected.

Challenges of implementing Blue-green deployment

Errors when changing user routing

Blue-green is the best choice of deployment strategy in many cases, but it comes with some challenges. One issue is that during the initial switch to the new (green) environment, some sessions may fail, or users may be forced to log back into the application. Similarly, when rolling back to the blue environment after an error, users logged in to the green instance may face service issues.

With more advanced load balancers, these issues can be overcome by slowly moving new traffic from one instance to the other. The load balancer can be programmed either to wait for a fixed duration for users to become inactive, or to force-close the sessions of users still connected to the blue instance after the specified time limit. This might slow down the deployment process and may result in some failed or stuck transactions for a very small fraction of users. But it provides a more seamless and uninterrupted service overall than the method where routers force all users out and divert traffic at once.
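The draining behaviour described above can be expressed as a small routing rule. This is a sketch under assumptions: the `route` function, its parameters, and the 300-second timeout are illustrative and do not correspond to any particular load balancer's configuration.

```python
from typing import Optional


def route(
    session_env: Optional[str],
    seconds_since_switch: float,
    drain_timeout: float,
) -> str:
    """Decide which environment serves a request after the switch to green.

    session_env is the environment an existing session is attached to,
    or None for a brand-new session.
    """
    # New sessions are not attached anywhere yet and always go to green.
    if session_env is None:
        return "green"
    # Sessions already on blue may finish there until the timeout expires.
    if session_env == "blue" and seconds_since_switch < drain_timeout:
        return "blue"
    # After the timeout, remaining blue sessions are closed and moved.
    return "green"


DRAIN_TIMEOUT = 300.0  # hypothetical drain window, in seconds

new_user = route(None, 0.0, DRAIN_TIMEOUT)
draining_user = route("blue", 120.0, DRAIN_TIMEOUT)
expired_user = route("blue", 301.0, DRAIN_TIMEOUT)
```

A longer drain window means fewer interrupted sessions but a slower deployment, which is the trade-off the paragraph above describes.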

High infrastructure costs

The elephant in the room with blue-green deployments is the infrastructure cost. Organizations that adopt a blue-green strategy need to maintain infrastructure that is double the size their application requires. If you use elastic infrastructure, the cost can be absorbed more easily. Similarly, blue-green deployments can be a good choice for applications that are less hardware intensive.

Code compatibility

Lastly, the blue and green instances both live in the production environment, so developers need to ensure that each new update is compatible with the previous environment. For example, if a software update requires changes to a database (adding a new field or column, for example), the blue-green strategy is difficult to implement because traffic is at times switched back and forth between the blue and green instances. It should be a mandate to use a database that is compatible across all software updates (as some NoSQL databases are).
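One common way to reduce this difficulty, shown here only as a sketch, is to make schema changes additive so that the older version keeps working against the updated database. The `users` table and the two `*_add_user` functions are hypothetical, and SQLite is used purely because it runs in memory.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")


def blue_add_user(name: str) -> None:
    """Version 1 code: knows only the `name` column."""
    conn.execute("INSERT INTO users (name) VALUES (?)", (name,))


def green_add_user(name: str, email: str) -> None:
    """Version 1.1 code: also writes the new `email` column."""
    conn.execute(
        "INSERT INTO users (name, email) VALUES (?, ?)", (name, email)
    )


# Additive migration: the new column is nullable, so old inserts stay valid.
conn.execute("ALTER TABLE users ADD COLUMN email TEXT DEFAULT NULL")

green_add_user("ada", "ada@example.com")  # written while green is live
blue_add_user("bob")                      # written after a rollback to blue

# Each version selects only the columns it knows about.
blue_view = conn.execute("SELECT id, name FROM users ORDER BY id").fetchall()
green_view = conn.execute(
    "SELECT id, name, email FROM users ORDER BY id"
).fetchall()
```

Because version 1 names its columns explicitly and the new column accepts NULL, both versions can read and write the same table while traffic moves between blue and green. Changes that rename or drop columns do not have this property.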

Blue-green vs other deployment strategies

Refer to our advanced deployment strategy e-book for more information.

Conclusion

The blue-green strategy involves cost but is one of the most widely used advanced deployment strategies. Blue-green deployment is particularly well suited when you expect environments to remain consistent between releases and need reliable user sessions across new releases.

OpsMx Intelligent Continuous Delivery (ISD) platform offers out-of-the-box support for blue-green deployments. Learn more about how OpsMx ISD addresses some of the real world challenges around software delivery and deployment and can help address your needs.
